“In February 2002, a Predator tracked and killed a tall man in flowing robes along the Pakistan-Afghanistan border. The CIA believed it was firing at Bin Laden, but the victim turned out to be someone else.”

—The Los Angeles Times, January 24, 2006

“American government officials said one of the people in the group was tall and was being treated with deference by those around him. That gave rise to speculation that the attack might have been directed at Osama Bin Laden, who is 6-feet-4.”

—The New York Times, February 17, 2002

Is the United States military trained to interpret images? Have Predator pilots read Sontag, Svetlana Alpers, C. S. Peirce? As the decision to kill is increasingly based on images relayed from autonomous drones, one can’t help but ponder what kind of training the military receives in interpreting visual media.

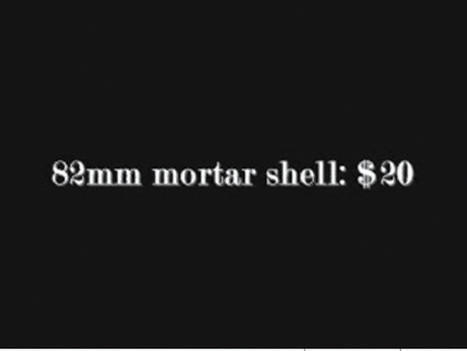

War by robot proxy: America is building a world in which its citizens won’t ever actually have to go to battle. Policies and associated technologies are engineered to prevent Americans from experiencing war firsthand. Journalists once acted as civilian proxies, but something changed with the war in Vietnam. The military began to view domestic opposition to war as a kind of enemy at home, and through exclusion, intimidation, and fastidious embedding, it has since kept reporters far from the realities of the field.

And in fact, soldiers themselves are increasingly being replaced by prosthetic technologies; remotely operated machines now play roles once played by humans. The Department of Defense is projected to spend nearly $3 billion in 2008 alone on Unmanned Aerial Vehicles such as the Predator, a spindly, medium-altitude, long-range aircraft developed after the first Persian Gulf War. These relatively inexpensive ($4 million) airplanes, usually piloted remotely from halfway around the world, can stay airborne for an entire day at a time. Originally marketed as surveillance devices, they were first used for a targeted assassination outside a war zone in Yemen, in 2002. Adding to this formidable arsenal, the US military recently purchased SWORDS, 80 lb robots that can be outfitted with machine guns and grenade and rocket launchers. A soldier can operate the robot from several thousand feet away simply by watching a video transmission from it and steering it remotely.

While these systems currently require human involvement — watching a blinking screen of pulsating pixels, making choices based on split-second interpretations — increasingly, autonomous software will be used to make even the biggest of decisions. Says Colonel Tom Ehrhard of the US Air Force, “Flight automation is riding the wave of Moore’s law, and it gets increasingly sophisticated. Automation will make it so that even adaptations in flight in combat will be made independently of human input.” In the Pentagon’s vision of the future, it will be software engineers who decide who gets to live or die, preserving their ethical choices in code that’s executed later.

Images taken from a Predator’s camera are circulated in a network of soldiers, spies, analysts, and pilots. In the few telemetry videos from Predators that have made their way to the internet (most often onto YouTube, probably leaked by the military itself), a half-dozen voices are overheard in urgent teleconferenced debates on what they are seeing, how to respond, when to fire, and at what. Sequestered air-conditioned workspaces are the new front, where the cubicle meets the cockpit.

In February 2002, the image of Daraz Khan, 5’11”, walking near Khost, Afghanistan, in search of scrap metal to sell, wended its way 15,000 feet up to a Predator; was sucked into a massive lens on a computer-stabilized gimbal; was projected onto the near-field focal plane array of a Forward-Looking Infrared (FLIR) camera; was piped through an analog-to-digital converter, through microcontrollers and computers and then a spread-spectrum modem, up to a satellite, and then down to ground-station computers, perhaps in Virginia or Germany; and was finally displayed on a flat-panel monitor studied by human eyes.

We have little frame of reference for understanding images of this nature. They are like documentary, in that they offer a view, with implicit and explicit perspective, of a nonfiction event. They are also like computer games, in that the viewer is meant to intervene in that event and engage with it. But drone-generated video is unlike documentary or video games (or indeed any other visual media) in that the decisions based upon it are both immediate and, increasingly, fatal.

These newly mediated images of war are like old ones in that they are hermeneutic: they demand interpretation. Freshman art history students are taught that images are open to interpretation, and by the time they’re seniors, they’ll have learned that photographic evidence is only as credible as the host of experts called in to explain it. They’ll also know that for decades after its invention, photography was leveraged to prove the existence of ghosts. In 1869 a Boston photographer was taken to court for selling ghost photographs; experts were called in on both sides, though the judge ultimately dismissed the case for lack of evidence. To this day, dozens of internet sites give tips on how to take (digital!) images of ghosts. Why is it, one might ask, that only humanists are trained in this history, never engineers or soldiers?

One cockpit video that swept the web in 2004 showed the obliteration of a large group of men in Fallujah, on the basis of no apparent information other than that they were men walking out of a mosque. Do the millions of dollars’ worth of imaging equipment used to make this film present a neutral image? Is the suspicion inherent in the taking of these images not transmitted through the apparatus to the viewer? The novelist Max Frisch once said, “Technology is the knack of so arranging the world that we don’t have to experience it.” After watching the real-time video of the bomb in Fallujah obliterating a score of anonymous pedestrians, the overwhelmed weapons operator simply sighed, “Oh, dude.” He was flying blind.